NVIDIA announces next-gen AI supercomputer chips, potentially pivotal for deep learning breakthroughs

NVIDIA is gearing up for the 2024 release of its next-generation AI supercomputer chips, which are expected to significantly affect deep learning and large language models (LLMs) such as OpenAI's GPT-4. The centerpiece of the launch is the HGX H200 platform, built around the H200 GPU on the NVIDIA Hopper architecture and succeeding the H100. The H200 is the first GPU to use HBM3e memory, whose higher speed and larger capacity suit it to large-scale LLMs. It is expected to offer 141 GB of memory at 4.8 terabytes per second, nearly double the capacity and 2.4 times the bandwidth of the A100.
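The comparison with the A100 can be checked with simple arithmetic. The sketch below uses the H200 figures quoted above; the A100 baseline (the 80 GB variant at roughly 2.0 TB/s) is an assumption not stated in this article, so treat the result as a rough sanity check and not as an official benchmark.

```python
# H200 figures come from the article; the A100 figures are ASSUMPTIONS
# (80 GB variant, peak memory bandwidth rounded to 2.0 TB/s).
H200_MEMORY_GB = 141
H200_BANDWIDTH_TBPS = 4.8
A100_MEMORY_GB = 80          # assumed baseline
A100_BANDWIDTH_TBPS = 2.0    # assumed baseline, rounded


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


capacity_ratio = ratio(H200_MEMORY_GB, A100_MEMORY_GB)
bandwidth_ratio = ratio(H200_BANDWIDTH_TBPS, A100_BANDWIDTH_TBPS)

print(f"Capacity:  {capacity_ratio:.2f}x the A100")
print(f"Bandwidth: {bandwidth_ratio:.2f}x the A100")
```

Under these assumed baselines, the capacity ratio comes out a little under 2 and the bandwidth ratio at 2.4, which matches the "nearly double" and "2.4 times" wording.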

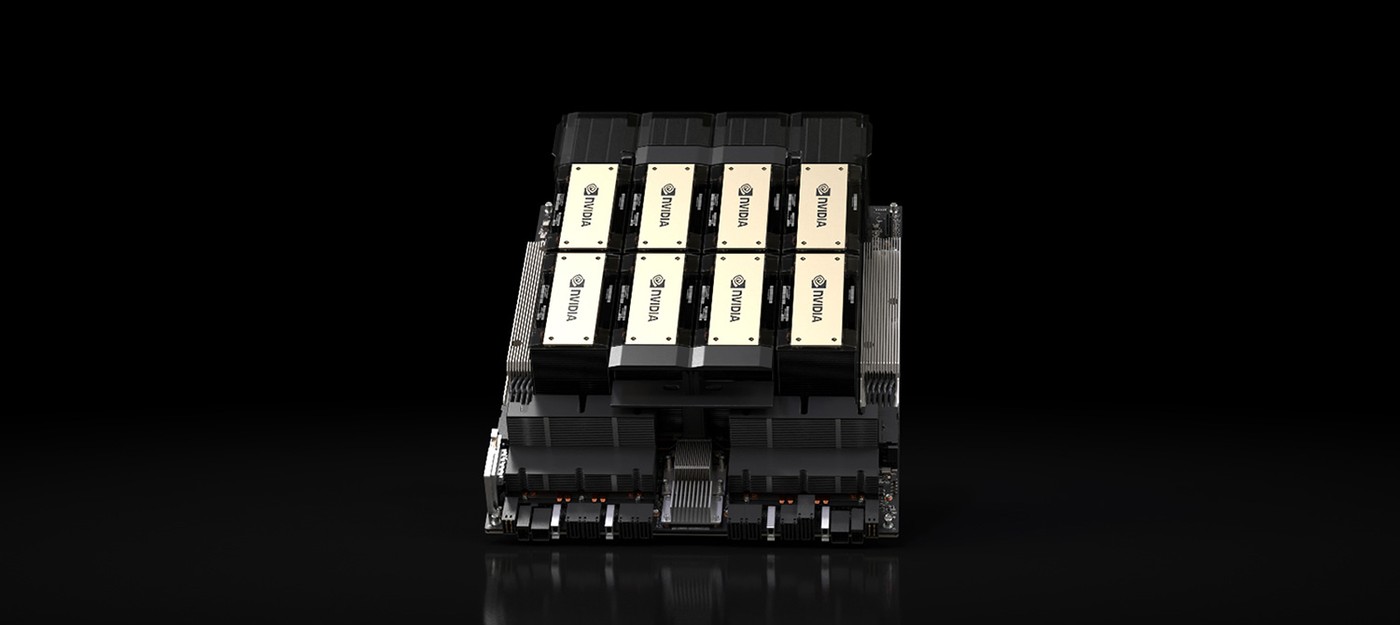

NVIDIA projects that the H200 will double inference speed on Llama 2, a 70-billion-parameter LLM, compared with the H100. It will be available in four- and eight-way configurations and is set to be deployed across various data center types by leading cloud service providers, with shipments starting in the second quarter of 2024.
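To see why memory capacity matters for a model of this size, the weight footprint can be estimated from the parameter count. This is a minimal sketch under stated assumptions: it counts model weights only (no KV cache, activations, or framework overhead), treats 1 GB as 10^9 bytes, and the precisions listed are illustrative choices and not configurations named in the announcement.

```python
def weight_footprint_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory needed for model weights alone, in GB (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9


LLAMA2_70B_PARAMS = 70e9   # 70 billion parameters, as stated in the article
H200_CAPACITY_GB = 141     # H200 memory capacity, as stated in the article

# Illustrative precisions (assumed): 2 bytes per weight and 1 byte per weight.
for label, bytes_per_param in [("16-bit", 2), ("8-bit", 1)]:
    needed = weight_footprint_gb(LLAMA2_70B_PARAMS, bytes_per_param)
    fits = needed <= H200_CAPACITY_GB
    print(f"{label}: {needed:.0f} GB of weights, fits in one H200: {fits}")
```

At 16-bit precision the weights alone come to about 140 GB, which only just fits within 141 GB and leaves almost no room for runtime state, so a real deployment would likely use lower precision or several GPUs.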

Additionally, the GH200 Grace Hopper "superchip" will combine the H200 GPU with NVIDIA's Grace CPU, linked by the NVLink-C2C interconnect. The chip is designed for high-performance supercomputers and aims to accelerate AI and HPC applications that process very large volumes of data.

The GH200 is expected to power more than 40 AI supercomputers worldwide, including significant deployments such as the Cray EX2500 from HPE. The JUPITER supercomputer at the Jülich Research Center in Germany, which will use the GH200, is poised to become the world's most powerful AI system once it is installed in 2024.

AI and data center products have contributed significantly to NVIDIA's revenue growth, and these upcoming chips are set to extend the company's dominance in the sector. They are also expected to support advances in fields such as climate forecasting, drug discovery, and quantum computing.